
Geo-economic impacts of the coronavirus: Global Supply Chains (Part I)

This two-part blog post examines the geo-economic impacts of the coronavirus through key developments in China. Part I looks at the impact of China's shutdown on global supply chains, and Part II considers the implications for the future of 5G technology.

Unlock = Open, not Choked!

Don't let a virus stall initiatives and weaken the economy.

The debate over internet governance and cyber crimes: West vs the rest?

The post looks at the two models proposed for internet governance and the role of cybercrime in shaping the debate. In this context, it also critically analyses the Budapest Convention (the “convention”) and the recently proposed Russian Resolution (the “resolution”), and the strategies each adopts to deal with the menace of cybercrime. It also briefly discusses India’s stance on these issues.

Freedom of Expression in India: Key Research and Findings

Over the last two years, CIS has carried out critical research on freedom of expression in India. We have continued our work on intermediary liability and expanded our expertise to emerging areas such as online extreme speech. Researchers have also closely tracked developments around internet shutdowns and the impact of social media and data on democratic processes in the country.

Kick-start the economy with cash flow

Pay government dues and enable high-speed connectivity

Essay: Watching Corona or Neighbours? - Introducing ‘Lateral Surveillance’ during COVID-19

Surveillance is already suspected to have become the ‘new normal’, given the extensive amounts of money being invested in it by governments around the globe. The only way out of this pandemic is to take a humane approach to surveillance, one that factors in people’s discriminatory tendencies when spreading information about those infected, in order to prevent excessive harm.

High priced restrictive entry, distorted regulations make India's telecom sector unattractive

In the wake of COVID-19, the Prime Minister has emphasised the opportunity to attract FDI by transforming the business environment, and has urged policymakers to make it easier to do business.

Short on Spectrum: Need for an enabling policy and regulatory environment

This article first appeared in Tele.net in March 2020.

Internet and telecom are key drivers of economic growth. India has the potential to become the largest internet-using country after China: current estimates show that five to seven million mobile internet users are added, and about 35 million smartphones are shipped, in the country every quarter. This huge demand for mobile broadband requires adequate spectrum and capacity in radio access networks. Further, because fibre connectivity is poor (only an estimated 15-20 per cent of existing towers are connected by fibre), the availability of backhaul spectrum is critical.

Given that mobile is the growth driver for telecom and internet in India, spectrum is a critical resource for the sector's growth, and it is the only natural resource that is equally endowed across all nations. However, in India, operators and citizens are spectrum-starved, and it is worth examining why. Moreover, with a growing economy placing ever higher demands on telecom services, the sector should be on a growth trajectory that surpasses others. Instead, the story of the Indian telecom sector is that of the proverbial killing of the goose that lays the golden eggs.

In the current scenario, there are three private sector operators (Bharti Airtel, Vodafone Idea and Reliance Jio) and two public sector entities, Bharat Sanchar Nigam Limited (BSNL) and Mahanagar Telephone Nigam Limited (MTNL). BSNL and MTNL have a declining market share of 10.5 per cent and hardly any 4G services, as they were not allocated spectrum for 4G. As of November 2019, teledensity stood at 88.9 per cent (157.3 per cent urban and 56.7 per cent rural). Mobile services accounted for nearly 98 per cent of urban teledensity and nearly 100 per cent of rural teledensity.
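Teledensity is the number of telephone subscriptions per 100 people, which is why the urban figure can exceed 100 per cent when people hold more than one connection. Because the overall figure is a population-weighted average of the urban and rural figures, the three November 2019 numbers also imply the urban share of the population. The short Python sketch below does that arithmetic; the function names are illustrative and not taken from any official methodology.

```python
def teledensity(subscriptions: float, population: float) -> float:
    """Telephone subscriptions per 100 people."""
    return 100.0 * subscriptions / population


def implied_urban_share(overall: float, urban: float, rural: float) -> float:
    """Solve overall = s * urban + (1 - s) * rural for the urban share s.

    Overall teledensity is total subscriptions over total population, which
    equals the population-weighted average of urban and rural teledensity.
    """
    return (overall - rural) / (urban - rural)


if __name__ == "__main__":
    # November 2019 figures quoted in the text.
    s = implied_urban_share(overall=88.9, urban=157.3, rural=56.7)
    print(f"Implied urban share of population: {s:.1%}")
```

With the quoted figures the implied urban share comes out at about 32 per cent.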

Vodafone Idea and Bharti Airtel are under tremendous financial stress. Their accumulated losses of more than Rs 2.2 trillion reflect revenue-share dues (based on adjusted gross revenue [AGR]) payable as part of their licence fee, penalties and interest for delayed payments to the Department of Telecommunications (DoT), high prices paid for spectrum in the 2016 auctions, and business disruption following the entry of Reliance Jio. To put these numbers in perspective, the entire sector's revenue for 2017-18 was Rs 2.11 trillion. Given the private operators' poor financial situation, the government had to delay the 5G spectrum auction, as most of them are not in a position both to bid for new spectrum and to invest in the new networks required. A major concern for policymakers is that competition and consumer choice could fall significantly if Vodafone Idea, given its weak financial state, exits the sector. Since BSNL and MTNL's market share is very low and unlikely to grow significantly, the telecom sector could effectively become a duopoly.

What has caused the telecom sector to be in this state? While the private sector's strategies and actions have contributed, a major portion of the responsibility lies with the restrictive policy and regulatory environment in which the sector has operated. The private sector must pay its dues for using a public resource (spectrum or right of way) for private gain, but overly restrictive licence conditions, DoT's constrictive approach to making more spectrum available, and infeasible spectrum pricing recommended by the Telecom Regulatory Authority of India (TRAI) could make the sector unviable. I would like to highlight some key concerns that could be addressed in the short term.

AGR

The restrictive environment is exemplified by the basis for calculating AGR, which included total income both from activities that require a licence, such as the provision of telecom services, and from those that do not, such as interest income and equipment sales. AGR forms the basis of the licence fee and spectrum usage charges (SUC). The licence explicitly identified all components of AGR, and telecom operators accepted these conditions when they signed it and must bear the consequences; even so, it is difficult to understand DoT's logic in framing such conditions. The government has a sovereign right to specify any condition, but an enlightened, long-term view would recognise that restrictive conditions are not in the interest of the sector.

Another example is SUC itself, a legacy of the period when service and spectrum licences were bundled. Since these have been unbundled and operators already pay for the spectrum itself, SUC, a significant outflow of 3-5 per cent of AGR, is no longer required. This is double charging and unfair. DoT may be concerned that removing SUC would reduce government revenue, but new services, higher adoption of existing services and the corresponding increase in service tax and corporate tax should more than compensate for it. Further, government receipts from licence fees and SUC have already come down, as the AGR of two of the private operators has declined.
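Because both the licence fee and SUC are computed on the same AGR base, widening that base raises both charges at once. The minimal Python sketch below illustrates this under stated assumptions: the income figures are invented, the 8 per cent licence-fee rate is an assumed value, and the 4 per cent SUC rate is simply a point within the 3-5 per cent range mentioned above. It also simplifies AGR by ignoring any deductions the licence may permit.

```python
from dataclasses import dataclass


@dataclass
class Income:
    """Hypothetical annual income of an operator, in Rs crore."""
    telecom_services: float  # licensed activity
    interest: float          # non-licensed activity
    equipment_sales: float   # non-licensed activity


def adjusted_gross_revenue(income: Income, include_non_licensed: bool) -> float:
    """Simplified AGR; any permitted deductions are ignored."""
    agr = income.telecom_services
    if include_non_licensed:
        agr += income.interest + income.equipment_sales
    return agr


def government_dues(agr: float, licence_fee_rate: float = 0.08,
                    suc_rate: float = 0.04) -> dict:
    """Licence fee and SUC, both levied as shares of AGR.

    The default rates are illustrative assumptions, not quoted tariffs.
    """
    licence_fee = licence_fee_rate * agr
    suc = suc_rate * agr
    return {"licence_fee": licence_fee, "suc": suc, "total": licence_fee + suc}


if __name__ == "__main__":
    income = Income(telecom_services=100.0, interest=5.0, equipment_sales=3.0)
    for broad in (True, False):
        agr = adjusted_gross_revenue(income, include_non_licensed=broad)
        dues = government_dues(agr)
        label = "broad AGR" if broad else "licensed-only AGR"
        print(f"{label}: AGR={agr:.2f}, dues={dues['total']:.2f} "
              f"(of which SUC={dues['suc']:.2f})")
```

With these invented figures, the broad definition raises total dues from 12.00 to 12.96, and SUC accounts for about a third of the total in either case.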

Spectrum availability

Compared with several other countries, India has a relatively constrained supply of spectrum for commercial use, in both the licensed and unlicensed bands. In a spectrum-starved country such as India, where each operator holds about one-fourth of the licensed spectrum held by operators in other countries, it is important to make more bands available, both licensed and unlicensed, and to unleash the potential of unlicensed spectrum.

Licensed spectrum

Operators have often declined to bid when licensed spectrum was put up for auction. This has been due to the arbitrary and high reserve prices set by TRAI, which are high even compared with those in several developed countries. A lower propensity to pay, higher levels of competition in India (with a peak of 12-14 operators per service area until 2012, and nearly five operators until the recent spate of mergers) and less spectrum per operator together make a recipe for financial stress. TRAI has a peculiar practice of setting the reserve price in any auction equal to the winning price in the previous auction; when a band is new, or no recent auction has been held in an existing band, it applies arbitrary multipliers to the winning price in the nearest band. Despite the failure of the 2016 auctions, in which bands that are in high demand globally attracted no bids, TRAI once again recommended very high reserve prices for the 3.3-3.6 GHz band in 2018. After several rounds of reconsideration between DoT and TRAI, TRAI announced that it had reduced these prices by 43 per cent. Even after this arbitrary reduction, they remained much higher than in developed countries where such spectrum had been auctioned.
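As described, the practice makes each reserve price depend on past outcomes: a high winning price carries straight into the next auction, and a new band inherits a scaled-up price from its neighbour. A minimal Python sketch of that rule follows; the multiplier and prices are hypothetical, since the text does not specify them.

```python
from typing import Optional


def reserve_price(last_winning_price: Optional[float],
                  nearest_band_price: float,
                  multiplier: float) -> float:
    """Reserve price under the practice described in the text.

    If the band was auctioned recently, reuse its last winning price.
    Otherwise (new band, or no recent auction), scale the winning price
    of the nearest band by a multiplier.
    """
    if last_winning_price is not None:
        return last_winning_price
    return nearest_band_price * multiplier


def reduced(price: float, cut: float) -> float:
    """Apply a proportional cut, e.g. cut=0.43 for a 43 per cent reduction."""
    return price * (1.0 - cut)


if __name__ == "__main__":
    # Hypothetical prices (Rs crore per MHz), for illustration only.
    carried_over = reserve_price(last_winning_price=40.0,
                                 nearest_band_price=25.0, multiplier=1.5)
    new_band = reserve_price(last_winning_price=None,
                             nearest_band_price=25.0, multiplier=1.5)
    print(carried_over, new_band, reduced(new_band, 0.43))
```

The sketch has no input for current demand or operators' ability to pay, which mirrors the author's criticism that the resulting prices are arbitrary.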

Unlicensed spectrum

India has around 660 MHz of spectrum available for unlicensed use, spread across various bands. This is far less than in other countries (the US 15,403 MHz, the UK 15,404 MHz, China 15,360 MHz, Japan 15,377 MHz and Brazil 15,360 MHz). DoT is perceived to be reluctant to make more spectrum licence-exempt, largely because of a narrow interpretation of the Supreme Court's 2012 judgment. On this reading, the Supreme Court mandated that spectrum be allocated only through auctions. However, a more informed reading indicates that the Supreme Court, in its judgment, described spectrum allocation as a policy decision within the purview of the executive. In a subsequent Presidential reference made by the government, the apex court elaborated that the scope of the judgment was limited to 2G spectrum distributed on a first-come, first-served basis during 2007-08. Further, any alienation of scarce public resources needs to be done in a fair and transparent manner, backed by a social or welfare purpose. Since unlicensed spectrum is not allocated specifically to any user, the choice of allocation method is not in question. By not acting on the clarifications in the judgment and the Presidential reference, DoT has delayed spectrum allocation in several bands, including unlicensed ones.
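To make the gap concrete, the short Python sketch below expresses India's unlicensed allocation as a fraction of each of the other figures quoted in the text. It uses only those quoted numbers; the dictionary and function names are illustrative.

```python
# Unlicensed spectrum in MHz, as quoted in the text.
UNLICENSED_MHZ = {
    "India": 660,
    "US": 15_403,
    "UK": 15_404,
    "China": 15_360,
    "Japan": 15_377,
    "Brazil": 15_360,
}


def india_share_of(country: str) -> float:
    """India's unlicensed spectrum as a fraction of another country's."""
    return UNLICENSED_MHZ["India"] / UNLICENSED_MHZ[country]


if __name__ == "__main__":
    for country in UNLICENSED_MHZ:
        if country != "India":
            print(f"{country}: India has {india_share_of(country):.1%} as much")
```

Each comparison comes out at roughly 4.3 per cent; in other words, each of the other countries listed has about 23 times as much unlicensed spectrum as India.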

Conclusion

The above examples indicate the restrictive policy regime that has characterised private entry into the sector since its inception. In the past, however, the government has shown how an enlightened policy perspective can enable services to take off, for instance through the shift from a one-time entry fee to a revenue-sharing regime and the move to a technology- and service-neutral licence. A similar approach, one that views the private sector as a partner in growth (with relevant provisions to prevent abuse) and enables competition to bring consumer choice, innovation and reasonable pricing, will see services take off again.

While the private sector must take a long-term view of what drives growth in the sector, rather than seeking redress only through short-term measures, the onus on the government is much greater. It is the government that sets the agenda and holds a vision of the kind of development citizens have a right to. Given the current state of the telecom sector, the government needs to push for a long-term, enlightened policy agenda that will provide world-class services to its citizens and increase India's competitiveness. I also hope that policymakers will recognise that 512 kbps, which counts as broadband under the current definition, should not qualify as a broadband speed.

If we aim low, we cannot achieve high growth.

What BharatNet can learn from the rural-roads scheme: involve states, local bodies, private sector

BharatNet appears to be foundering. It follows a top-down approach with little involvement of the states, implementing agencies or vendors. In contrast, the rural-roads scheme PMGSY, considered a success, works bottom-up: its implementation mechanism involves a dedicated agency under the user ministry, the PWD, rural-road development agencies, gram panchayats, private operators and others.

Ethics and Human Rights Guidelines for Big Data for Development Research

This is a four-part review of guideline documents for ethics and human rights in big data for development research. This research was produced as part of the Big Data for Development network supported by the International Development Research Centre, Canada.

A Compilation of Research on the Gig Economy

Over the past year, researchers at CIS have been studying gig economies and gig workers in India. Their work has involved consultative discussions with domestic workers, food delivery workers, taxi drivers, trade union leaders, and government representatives to document the state of gig work in India, and highlight the concerns of gig workers. The imposition of a severe lockdown in India in response to the outbreak of COVID-19 has left gig workers in precarious positions. Without the privilege of social distancing, these workers are having to contend with a drastic reduction in income, while also placing themselves at heightened health risks.

From Health and Harassment to Income Security and Loans, India's Gig Workers Need Support

Because most state governments deemed the delivery of food, groceries and other essential commodities an 'essential service', exempting it from temporary suspension during the COVID-19 lockdown, e-commerce companies and on-demand services have continued to deliver these goods. Yet actions to protect the workers, who are taking on significant risks, have been far less forthcoming than those to protect customers. Zothan Mawii (Tandem Research), Aayush Rathi (CIS) and Ambika Tandon (CIS) spoke with the leaders of four workers' unions and with labour researchers to identify actions that public agencies and private companies could take to better support the urgent needs of gig workers in India.

COVID-19 Charter Of Recommendations on Gig Work

Tandem Research and the Centre for Internet and Society organised a webinar on 9 April 2020 with unions representing gig workers and researchers studying labour rights and gig work, to uncover the experiences of gig workers during the lockdown. Based on the discussion, the webinar's participants drafted a set of recommendations for government agencies and platform companies to safeguard workers’ well-being. Here are excerpts from this charter of recommendations, which has been shared with multiple central and state government agencies and platform companies.

Rumours, Misinformation and Self-Verification of Facts in the Age of COVID-19

Efforts by the government or social media platforms can succeed only if individuals ask themselves: 'What can I do to verify this piece of information?'

Zothan Mawii - COVID-19 and Relief Measures for Gig Workers in India

CIS co-hosted a webinar with Tandem Research on 9 April 2020 on the impact of the COVID-19 response on the gig economy. It was a closed-door discussion among representatives of workers' unions, labour activists and researchers working on the gig economy and workers' rights, held to highlight the demands of workers' groups in the transport, food delivery and care work sectors. We saw this as an urgent intervention in light of the disruption to the gig economy caused by the nationwide lockdown to limit the spread of COVID-19. This is a summary of the discussions, authored by Zothan Mawii, a Research Fellow at Tandem Research.

Why should we care about takedown timeframes?

Content takedown timeframes, that is, the time an intermediary is allotted to respond to a legal takedown order, have received considerably less attention than other aspects of intermediary liability. This article examines why an appropriate timeframe matters for ensuring that online speech is not over-censored, and makes recommendations to that end.

After the Lockdown

This post was first published in the Business Standard, on April 2, 2020.

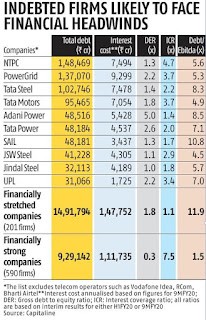

Source: Krishna Kant, "Coronavirus shutdown puts Rs 15-trillion debt at risk, to impact finances", Business Standard, March 30, 2020.

For the longer term, a fundamental reconsideration of how resources are allocated is needed, through coherent, orchestrated policy planning and support. Besides ensuring law and order and security, a primary responsibility of the government is to develop our inadequate and unreliable infrastructure, including facilities and services that enable efficient production clusters, their integrated functioning, and skilling. For instance, Apple’s recent decision against moving iPhone production from China to India was reportedly taken, first, because similarly large facilities (factories of 250,000 workers) are not feasible here and, second, because our logistics are inadequate. Such considerations should be factored into our planning, although Apple may well have to revisit the very sustainability of outsize facilities that require the sort of repressive conditions prevailing in China. In any case, we need not aim to build unsustainable mega-factories. A more practical approach may be to plan agglomerations of smaller, sustainable units that can aggregate their activity and output effectively and efficiently. Such developments could form the basis of numerous viable clusters and, where possible, capitalise on existing incipient clusters of activity. This infrastructure also needs to be extended to the countryside for agriculture and allied activities, so that productivity rises with a shift from rain-fed, extensive cultivation to intensive practices under more controlled conditions.

The automotive industry, the largest employer in manufacturing, offers an example for other sectors. Like telecom, it was a success story until recently, but it is now floundering, partly because of inappropriate policies, despite its systematic efforts to plan collaboratively and work with the government. It achieved the remarkable transformation of moving from BS-IV to BS-VI emission norms in just three years, upgrading by two levels with an investment of Rs 70,000 crore, whereas European companies have taken five to six years to upgrade by one level. This pace left no time to develop local sourcing and therefore meant heavy reliance on global suppliers, including China. While the industry's collaborative planning provides a model for other sectors, the question now is: what next? Its difficulties were aggravated not just by the radical change in market demand with the advent of ridesharing and e-vehicles, but also by the government’s approach to policies and taxation.

India’s ‘Self-Goal’ in Telecom

This post was first published in the Business Standard, on March 5, 2020.

The government apparently cannot resolve the problems in telecommunications. Why? Because the authorities are trying to balance the Supreme Court order on Adjusted Gross Revenue (AGR) with keeping the telecom sector healthy while safeguarding consumer interests. These irreconcilable differences have arisen because both the United Progressive Alliance and the National Democratic Alliance governments prosecuted unreasonable claims for 15 years, despite adverse rulings! This imagined “impossible trinity” is an entirely self-created conflation.

If only the authorities focused on what they can do for India’s real needs instead of tilting at windmills, we’d fare better. Now, we are close to a collapse in communications that would impede many sectors, compound the problem of non-performing assets (NPAs), demoralise bankers, increase unemployment, and reduce investment, adding to our economic and social problems.

Is resolving the telecom crisis central to the public interest? Yes, because people need good infrastructure to use time, money, material, and mindshare effectively and efficiently, with minimal degradation of their environment, whether for productive purposes or for leisure. Systems that deliver water, sanitation, energy, transport and communications support all these activities. Nothing matches the transformation brought about by communications in India from 2004 to 2011 in our complex socio-economic terrain and demography. Its potential is still vast, limited only by our imagination and capacity for convergent action. Yet, the government’s dysfunctional approach to communications is in stark contrast to the constructive approach to make rail operations viable for private operators.

India’s interests are best served if people get the services they need for productivity and wellbeing with ease, at reasonable prices. This is why it is important for government and people to understand and work towards establishing good infrastructure.

What the Government Can Do

An absolute prerequisite is for all branches of government (legislative, executive, and judicial), the press and media, and society, to recognise that all of us must strive together to conceptualise and achieve good infrastructure. It is not “somebody else’s job”, and certainly not just the Department of Telecommunications’ (DoT’s). The latter cannot do it alone, or even take the lead, because the steps required far exceed its ambit.

Act Quickly

These actions are needed immediately:

First, annul the AGR demand using whatever legal means are available. For instance, the operators could file an appeal, and the government could settle out of court, renouncing the suit and accepting the Telecom Disputes Settlement and Appellate Tribunal (TDSAT) ruling of 2015 on AGR.

Second, issue an appropriate ordinance that rescinds all extended claims. Follow up with the requisite legislation, working across political lines for consensus in the national interest.

Third, take action to organise and deliver communications services effectively and efficiently to as many people as possible. The following steps will help build and maintain more extensive networks with good services, reasonable prices, and more government revenues.

Enable Spectrum Usage on Feasible Terms

Wireless regulations

Unlike wireless alternatives, fibre or cable cannot feasibly reach most people in India. Realistically, connectivity beyond the nearest fibre termination point has to be extended through wireless middle-mile connections, with Wi-Fi for most last-mile links. The technology is available, and administrative decisions together with appropriate legislation can immediately enable authorised operators to use spectrum in the 60 GHz, 70-80 GHz and sub-700 MHz bands for wireless connectivity. The first two bands are useful for high-capacity short- and medium-distance hops, while the third suits hops of up to 10 km. The DoT can follow its own precedent set in October 2018 for 5 GHz Wi-Fi, i.e., use the US Federal Communications Commission regulations as a model.1 The one adaptation needed for our circumstances is to restrict middle-mile use of these bands to authorised operators rather than making them open access, in view of the spectrum payments operators have made. Policies in the public interest allowing spectrum use without auctions do not contravene Supreme Court orders.

Policies: Revenue sharing for spectrum

A second requirement is that all licensed spectrum be paid for as a share of revenues based on usage, as licence fees are, in lieu of auction payments. Legislation to this effect can ensure that spectrum for communications is either paid for through revenue sharing for actual use, or is open access for all Wi-Fi bands. The restricted middle-mile use mentioned above can be charged at minimal administrative costs for management through geo-location databases to avoid interference. In the past, revenue sharing has earned much more than up-front fees in India, and rejuvenated communications.2 There are two additional reasons for revenue sharing. One is the need to manufacture a significant proportion of equipment with Indian IPR or value addition, so that we need not rely on imports as heavily as we do. This is critical for achieving a better balance of payments, and for strategic considerations. The second is to enable local talent to design and develop devices and solutions for local as well as global markets, an opportunity currently denied to them because it is virtually impossible for them to access spectrum, whatever stated policies might claim.
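The difference between the two payment models is largely one of timing. The Python sketch below compares the cumulative payments of a hypothetical upfront auction fee with those of a revenue share on a growing revenue stream. All figures, including the 5 per cent rate and the revenue ramp, are invented purely to illustrate the mechanism; whether revenue sharing earns more in practice depends on actual revenue growth, for which the article cites past Indian experience.

```python
from itertools import accumulate


def upfront_schedule(fee: float, years: int) -> list[float]:
    """Auction-style payment: the whole fee is due in year 0."""
    return [fee] + [0.0] * (years - 1)


def revenue_share_schedule(revenues: list[float], rate: float) -> list[float]:
    """Revenue-share payment: each year's charge is a share of that year's revenue."""
    return [rate * r for r in revenues]


if __name__ == "__main__":
    # Hypothetical revenue ramp (Rs crore per year) as a new network is rolled out.
    revenues = [10.0, 20.0, 40.0, 60.0, 80.0]
    upfront = upfront_schedule(fee=10.0, years=len(revenues))
    shared = revenue_share_schedule(revenues, rate=0.05)
    for year, (u, s) in enumerate(zip(accumulate(upfront), accumulate(shared))):
        print(f"year {year}: cumulative upfront={u:.1f}, revenue share={s:.1f}")
```

With these invented numbers, the operator pays 0.5 instead of 10 in year 0, keeping capital for network build-out, while the government's cumulative receipts overtake the upfront fee in year 4 because they grow with revenue. Different assumptions would move the crossover; the sketch shows the mechanism, not the outcome.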

Policies and Organisation for Infrastructure Sharing

Further, the government needs to actively facilitate shared infrastructure with policies and legislation. One way is through consortiums for network development and management, charging for usage by authorised operators. At least two consortiums that provide access for a fee, with government’s minority participation in both for security and the public interest, can ensure competition for quality and pricing. Authorised service providers could pay according to usage.
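The article leaves the consortium's charging formula open. One simple possibility, assumed here for illustration, is pro-rata cost recovery: the consortium divides its network cost among authorised operators according to measured usage. The Python sketch below shows that rule; operator names, costs and traffic units are hypothetical, and a real scheme might add a margin or tiered pricing.

```python
def usage_charges(network_cost: float, usage: dict[str, float]) -> dict[str, float]:
    """Split a shared network's cost among operators in proportion to usage."""
    total = sum(usage.values())
    if total <= 0:
        raise ValueError("total usage must be positive")
    return {operator: network_cost * u / total for operator, u in usage.items()}


if __name__ == "__main__":
    # Hypothetical annual cost (Rs crore) and traffic (petabytes) per operator.
    charges = usage_charges(
        120.0, {"Operator A": 50.0, "Operator B": 30.0, "Operator C": 20.0}
    )
    print(charges)
```

Here each operator's charge tracks its share of traffic (60, 36 and 24 respectively), so the consortium recovers its full cost without any operator paying for capacity it does not use.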

Press reports of a consortium approach to 5G, in which operators pay as before and the government “contributes” spectrum, reflect seriously flawed thinking.3 Such extractive payments, which leave no funds for network development and service provision, merely sustain among the ill-informed an illusion that genuine efforts are being made; the same people rejoice in the idea of free services while acclaiming high government charges (the two are obviously incompatible).

Instead of tilting at windmills that do not serve people’s needs while beggaring their prospects, commitment to our collective interests requires implementing what can be done with competence and integrity.

Shyam (no space) Ponappa at gmail dot com

1. https://dot.gov.in/sites/default/files/2018_10_29%20DCC.pdf

2. http://organizing-india.blogspot.in/2016/04/breakthroughs-needed-for-digital-india.html

3. https://www.business-standard.com/article/economy-policy/govt-considering-spv-with-5g-sweetener-as-solution-to-telecom-crisis-120012300302_1.html

CIS Comments on 'Pre Draft' of the Report of the UN Open Ended Working Group

CIS submitted comments on the 'pre draft' report of the United Nations Open Ended Working Group on developments in the field of information and telecommunications in the context of international security.

CIS Comments on NODE Consultation Paper

CIS submitted comments in response to MeitY's Consultation Paper on National Open Digital Ecosystems (NODEs). The comments were authored by Aman Nair, Elonnai Hickok and Arindrajit Basu, with inputs from Gurshabad Grover.