What is the problem with ‘Ethical AI’? An Indian Perspective

On 22 May 2019, the OECD member countries adopted the OECD Council Recommendation on Artificial Intelligence. The Principles, meant to provide an “ethical framework” for governing Artificial Intelligence (AI), were the first set of guidelines signed by multiple governments, including non-OECD members: Argentina, Brazil, Colombia, Costa Rica, Peru, and Romania.

The article by Arindrajit Basu and Pranav M.B. was published by cyberBRICS on July 17, 2019.

This was followed by the G20's adoption of human-centred AI Principles on June 9th. These are the latest in a slew of (at least 32!) public and private ‘Ethical AI’ initiatives that seek to use ethics to guide the development, deployment and use of AI in a variety of use cases. They were conceived as a response to a range of concerns around algorithmic decision-making, including discrimination, privacy, and transparency in the decision-making process.

In India, a noteworthy recent document that attempts to address these concerns is the National Strategy for Artificial Intelligence published by the National Institution for Transforming India, also called NITI Aayog, in June 2018. As the NITI Aayog Discussion paper acknowledges, India is the fastest growing economy with the second largest population in the world and has a significant stake in understanding and taking advantage of the AI revolution. For these reasons the goal pursued by the strategy is to establish the National Program on AI, with a view to guiding the research and development in new and emerging technologies, while addressing questions on ethics, privacy and security.

While such initiatives and policy measures are critical to promoting discourse and focussing awareness on the broad socio-economic impacts of AI, we fear that they dangerously conflate tenets of existing legal principles and frameworks, such as human rights and constitutional law, with ethical principles, thereby diluting the scope of the former. While we agree that ethics and law can co-exist, ‘Ethical AI’ principles are often drafted in a manner that presents them as voluntary positive obligations that various actors have taken upon themselves, as opposed to legal codes with which they must necessarily comply.

To have optimal impact, ‘Ethical AI’ should serve as a decision-making framework only in specific instances when human rights and constitutional law do not provide a ready and available answer.

Vague and unactionable

Conceptually, ‘Ethical AI’ is a vague set of principles that are often difficult to define objectively. In this vein, academics like Brent Mittelstadt of the Oxford Internet Institute argue that, unlike in the field of medicine, where ethics has been used to design a professional code, ethics in AI suffers from four core flaws. First, developers lack a common aim or fiduciary duty to a consumer, which in the case of medicine is the health and well-being of the patient. Their primary duty is to the company or institution that pays their bills, which often prevents them from realizing the extent of the moral obligation they owe to the consumer.

The second is a lack of professional history, which could help clarify the contours of well-defined norms of ‘good behaviour.’ In medicine, ethical principles can be applied to specific contexts by considering what similarly placed medical practitioners did in analogous past scenarios. Given the relatively recent emergence of AI solutions, similar professional codes are yet to develop.

Third is the absence of workable methods or sustained discourse on how these principles may be translated into practice. Fourth, and we believe most importantly, medicine is governed not only by ethical codes but also by a robust legal framework and strict accountability mechanisms, which are absent in the case of ‘Ethical AI’. This absence gives both developers and policy-makers large room for manoeuvre.

Worse, such a focus on ethics may be a means of avoiding government regulation and the arm of the law. Indeed, due to its inherent flexibility and non-binding nature, ethics can be exploited as a piecemeal, red-herring solution to the problems posed by AI. Controllers of AI development are often profit-driven private entities that gain reputational mileage by using the opportunity to deliberate extensively on broad ethical notions.

Under the guise of meaningful ‘self-regulation’, several organisations publish internal ‘Ethical AI’ guidelines and principles, and fund ethics research across the globe. In doing so, they evade the shackles of binding obligation and deflect attempts at tangible regulation.

Comparing Law to Ethics

This is in contrast to the well-defined jurisprudence that human rights and constitutional law offer, which should serve as the edifice of data-driven decision making in any context.

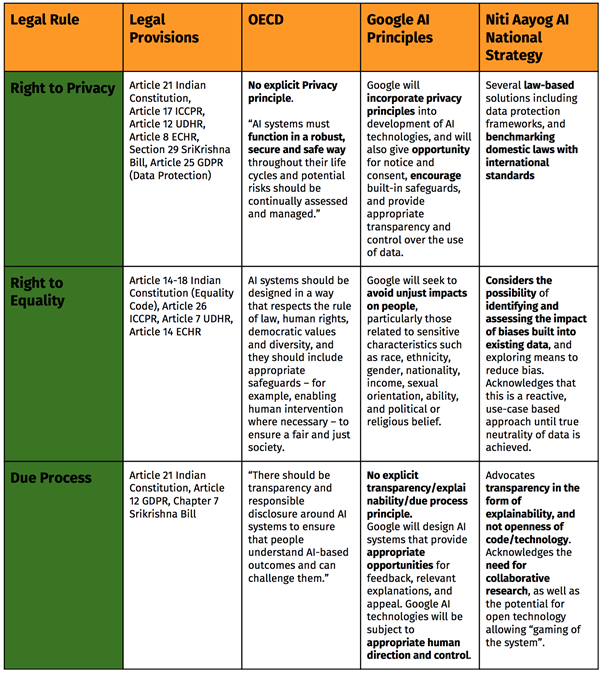

In the accompanying table, we try to explain this point by looking at how three core fundamental rights enshrined both in our constitution and in human rights instruments across the globe (the right to privacy, the right to equality or right against discrimination, and due process) find themselves captured in three different sets of ‘Ethical AI’ frameworks. One of these is inter-governmental (OECD), one was devised by a private sector actor (‘Google AI’), and one comes from our very own NITI Aayog.

With the exception of certain principles, most ‘Ethical AI’ principles are loosely worded, using phrases such as ‘seek to avoid’, ‘give opportunity for’, or ‘encourage’. A notable exception is NITI Aayog’s approach to protecting privacy in the context of AI. The document explicitly recommends the establishment of a national data protection framework and sectoral regulations that apply to specific contexts, with international standards such as GDPR considered as benchmarks. However, it fails to reference available constitutional standards when it discusses bias or explainability.

Several similar legal rules that have been enshrined in legal provisions, and outlined and elucidated through years of case law and academic discourse, can be utilised to underscore and guide AI principles. However, existing AI principles do not adequately articulate how the legal rule can actually be applied to various scenarios by multiple organisations.

We do not need a new “Law of Artificial Intelligence” to regulate this space. Judge Frank Easterbrook’s famous 1996 proclamation on the ‘Law of the Horse’, through which he opposed the creation of a niche field of ‘cyberspace law’, comes to mind. He argued that a multitude of legal rules deal with ‘horses’, including rules on the sale of horses, on individuals kicked by horses, and on the licensing and racing of horses. As with cyberspace, any attempt to arrive at a specialised corpus of ‘law of the horse’ would be shallow and ineffective.

Instead of fidgeting around for the next shiny regulatory tool, industry, practitioners, civil society and policy makers need to get back to the drawing board and think about applying the rich corpus of existing jurisprudence to AI governance.

What is the role for ‘Ethical AI’?

What role can ‘ethical AI’ then play in forging robust and equitable governance of Artificial Intelligence? As it does in all other societal avenues, ‘ethical AI’ should serve as a framework for making legitimate algorithmic decisions in instances where law might not have an answer. An example of such a scenario is the Project Maven saga, where 3,000 Google employees signed a petition opposing Google’s involvement with a US Department of Defense project by claiming that Google should not be involved in “the business of war.” There is no law, international or domestic, that suggests that Project Maven, which was designed to study battlefield imagery using AI, was illegal. However, the debate at Google proceeded on ethical grounds and on the application of the ‘Ethical AI’ principles to that context.

We realise the importance of social norms and mores in carving out any regulatory space. We also appreciate the role of ethics in framing these norms for responsible behaviour. However, discourse across civil society, academic, industry and government circles all across the globe needs to bring law back into the discussion as a framing device. Not doing so risks diluting the debate and potential progress to a set of broad, unactionable principles that can easily be manipulated for private gain at the cost of public welfare.